This week Darko posted a very interesting podcast on the topic of digital audio:

Nothing in the podcast is a new revelation, but it pulls together many important concepts and ideas all in one place. It’s not overly technical, but you do have to stop and think about the content to digest it.

Here’s my too-long TL;DR of the podcast (with a little commentary on my part):

Digital Audio Waveform Clock / Timing Generation:

Digital audio file transfer guarantees only that the amplitude data (16-bit or 24-bit samples) is exactly right: no bits are lost or missing. The original timing between audio samples is completely lost in the storage and transfer of the digital file, so it must be regenerated to play back the original audio waveform.

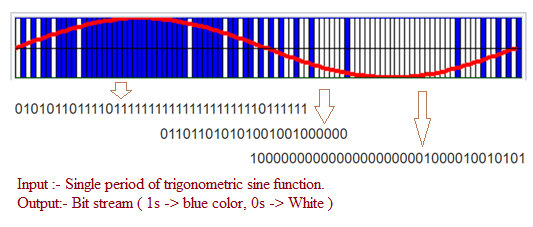
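To make the point about lost timing concrete, here is a minimal Python sketch using the standard-library `wave` module. The helper names `roundtrip` and `playback_times` are my own, chosen only for illustration. It writes a few 16-bit samples to an in-memory WAV file, reads them back bit-for-bit, and shows that the only timing information that survives is the nominal sample rate in the header; the instant at which each sample should be played has to be recomputed from that number by whatever clock the playback device has.

```python
import io
import struct
import wave


def roundtrip(samples, sample_rate=44_100):
    """Write 16-bit mono samples to an in-memory WAV file and read them back."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)            # 2 bytes = 16-bit samples
        w.setframerate(sample_rate)  # nominal rate: a number, not a clock
        w.writeframes(struct.pack(f"<{len(samples)}h", *samples))
    buf.seek(0)
    with wave.open(buf, "rb") as r:
        rate = r.getframerate()
        raw = r.readframes(r.getnframes())
    decoded = list(struct.unpack(f"<{len(raw) // 2}h", raw))
    return decoded, rate


def playback_times(n_samples, sample_rate):
    """Ideal playback instants, regenerated purely from the nominal rate."""
    return [n / sample_rate for n in range(n_samples)]


if __name__ == "__main__":
    original = [0, 1000, -1000, 32767, -32768]
    decoded, rate = roundtrip(original)
    print("bit-exact:", decoded == original)
    print("regenerated times (s):", playback_times(len(decoded), rate))
```

The amplitude values come back exactly, but the list of times is an ideal that the real playback clock can only approximate.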

Once the necessary clock has been generated and synchronized to the data, this digital bitstream (containing both clock and data) behaves similarly to an analog waveform, containing both amplitude (bits) and frequency (clock) information. And just as an analog audio waveform is distorted by any modulation of its amplitude or frequency, so a digital audio waveform is also distorted by modulation of its amplitude or frequency.

Noise in the synchronized data stream is unlikely to be severe enough to corrupt the data bits. If that does happen, the auditory effect is painfully obvious. But the frequency information is very susceptible to modulation by noise, which shifts the timing of each bit. The amplitude is absolutely correct, but the frequency may be wrong.
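As a rough illustration of "right amplitude, wrong time", the sketch below models a sine wave whose samples are converted at slightly wrong instants and measures the resulting error. This is my own simplified model, not something from the podcast: it assumes random Gaussian jitter that is independent from sample to sample, and the 10 kHz tone and 1 ns jitter figures are arbitrary example values. The function names are hypothetical. The simulated result is compared with the standard jitter-limited SNR formula, SNR = -20·log10(2π·f·σ), where f is the signal frequency and σ is the RMS jitter.

```python
import math
import random


def jitter_snr_db(signal_hz, jitter_rms_s, sample_rate=44_100, n=50_000, seed=0):
    """Simulated SNR of a full-scale sine converted with a jittery clock.

    Assumption: jitter is white Gaussian noise with the given RMS value.
    """
    rng = random.Random(seed)
    signal_power = 0.0
    error_power = 0.0
    for i in range(n):
        t_ideal = i / sample_rate
        t_actual = t_ideal + rng.gauss(0.0, jitter_rms_s)  # clock edge is early/late
        ideal = math.sin(2 * math.pi * signal_hz * t_ideal)
        actual = math.sin(2 * math.pi * signal_hz * t_actual)
        signal_power += ideal * ideal
        error_power += (actual - ideal) ** 2
    return 10 * math.log10(signal_power / error_power)


def theoretical_snr_db(signal_hz, jitter_rms_s):
    """Textbook jitter-limited SNR for a sine wave."""
    return -20 * math.log10(2 * math.pi * signal_hz * jitter_rms_s)


if __name__ == "__main__":
    f, sigma = 10_000.0, 1e-9  # example values only
    print(f"simulated:   {jitter_snr_db(f, sigma):.1f} dB")
    print(f"theoretical: {theoretical_snr_db(f, sigma):.1f} dB")
```

Under these assumptions the formula gives about 84 dB for a 10 kHz tone with 1 ns of RMS jitter, and the error grows with both signal frequency and jitter. Real jitter is rarely this simple (it is often correlated with the data or the power supply), so treat the numbers as an order-of-magnitude guide.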

SPDIF, TOSLINK, AES, and I2S are all synchronous transfer methods - meaning that the clock travels with the data (embedded in the bitstream for SPDIF, TOSLINK, and AES; on separate clock lines alongside the data for I2S). As such, any distortion of the digital waveform has the potential to impact sonic quality.

Transferring digital audio over Ethernet or USB is an asynchronous activity - meaning no clock information is present, only data bits. This allows for error correction, re-ordering of packets, and retransmission requests for missed packets. Once an asynchronous transfer is completed (or buffered sufficiently), the data is converted to a synchronous stream with a locally generated clock and passed on to the digital-to-analog converter block (typically via internal I2S) for conversion to an analog waveform.
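The buffer-then-reclock idea can be sketched in a few lines of Python. This is a toy model under my own assumptions, not how any particular streamer, network stack, or USB receiver is implemented: `ReorderBuffer` and `clock_out` are hypothetical names, packets are assumed to carry a simple integer sequence number, and the "local clock" is just a computed timestamp.

```python
import heapq


class ReorderBuffer:
    """Collects packets that arrive in any order and releases them in sequence."""

    def __init__(self):
        self._heap = []     # min-heap of (sequence number, payload)
        self._next_seq = 0  # next sequence number owed to the output

    def push(self, seq, payload):
        if seq >= self._next_seq:  # drop packets that were already delivered
            heapq.heappush(self._heap, (seq, payload))

    def pop_ready(self):
        """Return payloads in order, stopping at the first gap."""
        out = []
        while self._heap and self._heap[0][0] <= self._next_seq:
            seq, payload = heapq.heappop(self._heap)
            if seq == self._next_seq:  # anything lower is a duplicate
                out.append(payload)
                self._next_seq += 1
        return out

    def missing(self):
        """Sequence number to re-request if stalled on a gap, else None."""
        if self._heap and self._heap[0][0] > self._next_seq:
            return self._next_seq
        return None


def clock_out(payloads, sample_rate):
    """Attach locally generated timestamps to the ordered samples."""
    n = 0
    for payload in payloads:
        for sample in payload:
            yield n / sample_rate, sample
            n += 1


if __name__ == "__main__":
    buf = ReorderBuffer()
    buf.push(1, [3, 4])                  # arrives early
    print(buf.pop_ready(), buf.missing())  # nothing ready, packet 0 is missing
    buf.push(0, [1, 2])                  # the late packet shows up
    ordered = buf.pop_ready()
    print(list(clock_out(ordered, sample_rate=4)))
```

The point of the sketch is that arrival order and arrival time of the packets have no bearing on the output: timing is created only at the last step, by the local clock.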

Having a high-quality (i.e. low-jitter, low-drift) clock at the digital-to-analog converter is fundamental to achieving a high-quality analog output. Creation of this clock is done in stages, starting with assembly of the data from various asynchronous transfers (streaming over the internet, streaming from a media server, transfer via USB), going through various synchronization steps (generation of the reference clock, alignment of the clock to the data, filtering / buffering of the synchronized data), and ending with the synchronized bitstream entering the D2A. The more closely this clock matches the clock used when the data was recorded, the more accurate the result can be.

Power and Ground Noise

The second half of this story is power and ground noise. Digital data transmission and processing can bring with them a lot of analog power and ground noise. Digital circuits tend to be very noise immune, and designers take advantage of this by using low-cost power supplies and board/component designs. But D2A converters are VERY noise sensitive. Any noise accumulated through the digital data transmission process, or the clock/data synchronization process, can adversely impact the analog output of the D2A conversion. This is where high-quality power supplies, galvanic isolation, quality cables, and other “low noise” devices and best practices come into play.

For example, using an LPS (linear power supply) on a network streamer won’t reduce the likelihood of a bit being lost or improve the quality of the clock being generated. But it will reduce the amount of analog noise riding along with the digital bitstream. How much influence this analog noise has on the end result depends a lot on the internal DAC architecture and layout. Maybe the amount of noise is inconsequential compared to other noise already present. Or maybe the DAC internally filters this noise very well, and it doesn’t matter. Whatever the case, less noise in is always a good thing.

Another example is using a network server such as a Roon Core to stream Qobuz. The server buffers the asynchronously transmitted data packets and sequences them, so what is sent over your home network to the Roon endpoint is better ordered (fewer re-transmit requests, fewer error corrections, etc). This reduces the digital activity in the endpoint compared to streaming directly from Qobuz to the endpoint, which in turn reduces some of the digital supply / ground noise generated. When the endpoint is the DAC itself (i.e. a streaming DAC), the additional digital activity of streaming directly over the internet, instead of using a quality media streamer on the local network, can add up to adversely influence the sonic quality.

Some of these effects are certainly “mouse nuts”. But when getting to the absolute top end of resolving systems, even “mouse nuts” can become significant.